Running Jobs

Revision as of 04:04, 30 June 2023

Running jobs on any HPCC server

Overview

Running jobs on any HPCC production server requires two steps. In the first step, users must prepare:

- Input data file(s) for the job.

- Parameter file(s) for the job (if applicable; these may also be in a subdirectory, referenced with an explicit path).

The files must be placed in the user's /scratch/<userid> directory or one of its subdirectories. Jobs cannot start from the home directories (/global/u/<userid>).

In the second step, users must:

- Set up the execution environment by loading the appropriate module(s);

- Write a correct job submission script that holds the computational parameters of the job (e.g. the number of cores needed, amount of memory, run time, etc.).

The job submission script must also be placed in /scratch/<userid>.

File systems on Penzias, Appel and Karle

These servers use two separate file systems: /global/u and /scratch. Scratch is a small, fast file system mounted on all nodes. /global/u is a large but slower file system which holds all users' home directories (/global/u/<userid>) and is mounted only on the login node of these servers. All jobs must start from the scratch file system; jobs cannot be submitted from the main file system.

Creating and transferring input/output data and parameter files

The input data and parameter files can be generated locally or transferred directly to /scratch/<userid> using the file transfer node (cea) or GlobusOnline. In both cases HPCC recommends transferring the files to the user's home directory (/global/u/<userid>) first and then copying the needed files from the home directory to /scratch/<userid>. In addition, these files can be transferred from users' local storage (e.g. a laptop) to DSMS (/global/u/<userid>) using cea and/or Globus. The submission script must be created with a Unix/Linux text editor only, such as Vi/Vim, Edit, Pico or Nano. MS Word is a word processing system and cannot be used to create job submission scripts.
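A minimal sketch of the recommended route, run on the login node once the files are in the home directory: it copies the files a job needs into a job directory under scratch. It assumes that <userid> is your login name (the $USER variable); the function name stage_to_scratch and the file names in the example are hypothetical.

```shell
#!/bin/bash
# Sketch: copy the files a job needs from the home directory to scratch.
# Assumption: <userid> is the login name held in $USER.
# HOME_BASE and SCRATCH_BASE may be overridden, e.g. for a dry test.
HOME_BASE=${HOME_BASE:-/global/u/$USER}
SCRATCH_BASE=${SCRATCH_BASE:-/scratch/$USER}

# stage_to_scratch <job_dir> <file>...
# Each <file> is a path relative to HOME_BASE; it is copied (keeping
# timestamps and permissions) into SCRATCH_BASE/<job_dir>.
stage_to_scratch() {
    local job_dir=$1
    shift
    local dest="$SCRATCH_BASE/$job_dir"
    mkdir -p "$dest" || return 1
    local f
    for f in "$@"; do
        cp -p "$HOME_BASE/$f" "$dest/" || return 1
    done
}

# Example (hypothetical file names):
#   stage_to_scratch run01 inputs/mesh.dat params/run01.in
```

Keeping the master copies in /global/u/<userid> and copying only what a run needs means the scratch copy can be discarded after the job without losing anything.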

File systems on Arrow

Arrow is attached to an NSF-funded 2 PB global hybrid file system, which holds both users' home directories (/global/u/<userid>) and users' scratch directories (/scratch/<userid>). The underlying file system manages the placement of files to ensure the best possible performance for different file types. It is important to remember that only the scratch directories are visible on the nodes. Consequently, jobs can be submitted only from the /scratch/<userid> directory. Users must preserve valuable files (data, executables, parameters, etc.) in /global/u/<userid>.

Creating and transferring input/output data and parameter files

The Arrow file system is not yet integrated with the HPCC file transfer infrastructure, and thus users cannot use GlobusOnline or the file transfer node as described above. Users can only create parameter files and job submission scripts directly in /scratch/<userid>. Input data and other large files must be tunneled to Arrow. Users are encouraged to contact HPCC for the actual procedure.

Copy or move files between Arrow and other servers

Because Arrow is detached from the main HPC infrastructure, user files can only be tunneled to Arrow using the ssh tunneling mechanism. Users cannot use Globus Online and/or Cea to transfer files between the new and old file systems, nor can they use Cea and Globus Online to transfer files from their local devices to Arrow's file system. However, ssh tunneling offers an alternative way to securely transfer files to Arrow over the Internet, using the ssh protocol with Chizen as the ssh server. Users are encouraged to contact HPCC for further guidance.

Set up execution environment

Overview of LMOD environment modules system

Each application, library and executable requires a specific environment. In addition, many software packages and system packages exist in different versions. To ensure the proper environment for every application, library or system software, CUNY-HPCC uses an environment module system, which offers a quick and easy way to dynamically change the user's environment through modules. Each module is a file which describes the environment needed for a package. Modulefiles may be shared by all users on a system, and users may have their own collections of modulefiles. Note that on the old servers (Penzias, Appel) HPCC uses the TCL-based module management system, which has fewer capabilities than LMOD. On Arrow HPCC uses only the LMOD environment management system. LMOD is Lua based and is able to resolve module hierarchies. It is important to mention that LMOD understands and accepts TCL modules; thus a user's modules existing on Appel or Penzias can be transferred and used directly on Arrow. LMOD also allows the use of shortcuts; for instance, ml can be used as a replacement for the command module load. In addition, users may create collections of modules and store them under a particular name. These collections can be used to "fast load" needed modules or to supplement or replace the shared modulefiles.

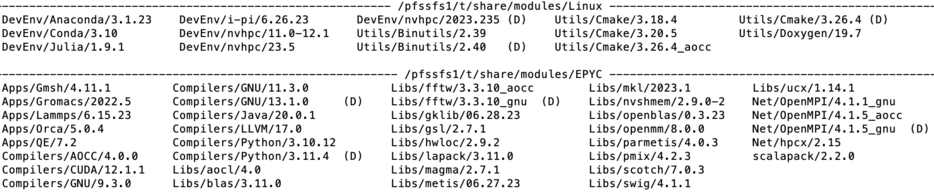

Modules categories

module category Library

Lmod modules are organized in categories. On Arrow the categories are Compilers, Libraries (Libs), Utilities (Util), Applications, Development Environments (DevEnv) and Communication (Net). To check the content of each category, users may use the command module category <name of the category>. The picture above shows the output. In addition, the version of the product is shown in the module file name. Thus the line

Compilers/GNU/13.1.0

shown in the EPYC directory denotes the module file for the GNU (C/C++/Fortran) compiler version 13.1.0, tuned for the AMD architecture.

List of available modules

To get a list of available modules, use the command

module avail

The output of this command on Arrow is shown below. A (D) after a module's name denotes that this module is the default; an (L) denotes that the module is already loaded.

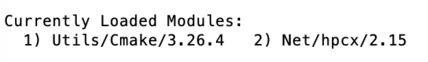

Load module(s) and check for loaded modules

The command module load <name of the module> (or module add <name of the module>) loads the requested module. For example, the commands below load the modules for the cmake utility and for the network interface. Users may check which modules are already loaded by typing module list. The figure below shows the output of this command.

module load Utils/Cmake/3.26.4

module add Net/hpcx/2.15

module list

As the example above shows, module add is equivalent to module load.

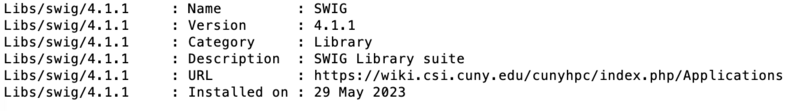

Module details

Information about a module is available via the whatis command, here for the library swig:

module whatis Libs/swig

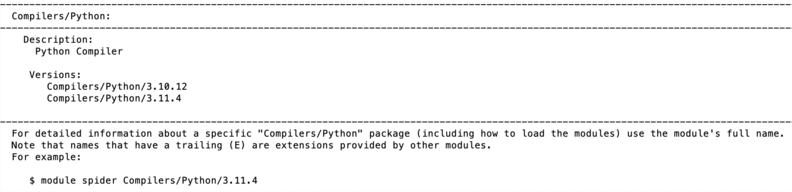

Searching for modules

Modules can be searched for with the module spider command. For instance, to search for Python modules:

module spider Python

Each modulefile holds the information needed to configure the shell environment for a specific software application, or to provide access to specific software tools and libraries. Modulefiles may be shared by all users on a system, and users may have their own collections of modulefiles. The users' collections may be used to "fast load" needed modules or to supplement or replace the shared modulefiles.

Batch job submission system

SLURM is the open-source scheduler and batch system used at HPCC. SLURM is used to submit jobs on all servers.

SLURM script structure

A Slurm script must do three things:

- prescribe the resource requirements for the job

- set the environment

- specify the work to be carried out in the form of shell commands

A simple SLURM script is given below:

#!/bin/bash

#SBATCH --job-name=test_job # some short name for a job

#SBATCH --nodes=1 # node count

#SBATCH --ntasks=1 # total number of tasks across all nodes

#SBATCH --cpus-per-task=1 # cpu-cores per task (>1 if multi-threaded tasks)

#SBATCH --mem-per-cpu=4G # memory per cpu-core (a number without a unit is read as megabytes)

#SBATCH --time=00:10:00 # total run time limit (HH:MM:SS)

#SBATCH --mail-type=begin # send email when job begins

#SBATCH --mail-type=end # send email when job ends

#SBATCH --mail-user=<valid user email>

cd $SLURM_SUBMIT_DIR # change to the directory from which the job was submitted

The first line of the Slurm script above specifies the Linux/Unix shell to be used. This is followed by a series of #SBATCH directives, which set the resource requirements and other parameters of the job. The script above requests 1 CPU-core and 4 GB of memory for 10 minutes of run time. Note that #SBATCH is a directive to SLURM, while a # not followed by SBATCH is interpreted as a comment line. Users can submit two types of jobs, batch jobs and interactive jobs:

sbatch <name-of-slurm-script> submits job to the scheduler

salloc requests an interactive job on compute node(s) (see below)

Job(s) execution time

The time to solution of a job is the sum of the time the job waits in the SLURM partition (queue) before being executed on the node(s) and its actual running time on the node(s). For parallel codes, the waiting time in the partition increases as more resources, such as CPU-cores, are requested. On the other hand, the execution time on the nodes decreases as the resources increase. Each job therefore has its own "sweet spot" which minimizes the time to solution. Users are encouraged to make several test runs to figure out what amount of requested resources works best for their job(s).
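One way to look for the sweet spot is to submit the same script several times with different resource requests and compare wait and run times. The sketch below assumes a multithreaded job script (the name job.sh is hypothetical) and relies on the fact that options given on the sbatch command line override the matching #SBATCH lines inside the script; the QOS and partition lines in the script still apply. Setting SBATCH_CMD=echo turns it into a dry run that only prints the arguments.

```shell
#!/bin/bash
# Sketch: submit one job script several times with different core counts.
# Options on the sbatch command line override the matching #SBATCH lines.
# Set SBATCH_CMD=echo for a dry run that only prints the arguments.
SBATCH_CMD=${SBATCH_CMD:-sbatch}

# submit_scaling_series <script> <cores>...
submit_scaling_series() {
    local script=$1
    shift
    local n
    for n in "$@"; do
        # One job per core count, named so the outputs are easy to tell apart.
        $SBATCH_CMD --cpus-per-task="$n" --job-name="scale_${n}" "$script"
    done
}

# Example: test 1, 2, 4, 8 and 16 cores (job.sh is a hypothetical name).
#   submit_scaling_series job.sh 1 2 4 8 16
```

For MPI codes the same loop can vary --ntasks instead of --cpus-per-task. Comparing the elapsed times of the finished jobs (for example with sacct) shows where adding cores stops paying off.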

Partitions and quality of service (QOS)

In SLURM terminology a partition means a queue. Jobs are placed in a partition for execution. QOS (quality of service) is a mechanism to apply policies and thus to control resources at the user level or at the partition level. In particular, when applied to a partition, the QOS allows the creation of a 'floating' partition, namely a partition that is assigned all of its nodes but allows jobs to use only a limited amount of those resources at any given time. HPCC uses QOS on all partitions on Arrow to set policies on these partitions. Thus HPCC ensures a fair-share policy for the resources in each partition and controls access to the partitions according to each user's status. For instance, the QOS ensures that only core members of the NSF grant have access to the NSF-funded resources in the partition partnsf (see below). Currently the partitions on Arrow are:

| Partition | Nodes | Allowed Users | Partition limitations |

|---|---|---|---|

| partnsf | n130,n131 | All registered core participants of NSF grant | 128cores/240h per job |

| partchem | n133 | All registered users from Prof. S.Loverde Group | no limits |

| partmath | n136,n137 | All registered users from Prof. A.Poje and A.Kuklov | no limits |

Note that users can submit jobs only to the partition they are registered to; e.g. jobs from users registered to partnsf will be rejected on other partitions (and vice versa).

Working with QOS and partitions on Arrow

Every job submission script on Arrow must contain the proper QOS and partition directives. For instance, all jobs intended to use n133 must have the following lines:

#SBATCH --qos=qoschem

#SBATCH --partition partchem

Similarly, all jobs intended to use n130 and n131 must have in their job submission script:

#SBATCH --qos=qosnsf

#SBATCH --partition partnsf

Note that Penzias does not use QOS. Thus users must adapt scripts they copy from Penzias to match the QOS requirements on Arrow.
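Since scripts copied from Penzias usually lack these lines, a quick check before submission can avoid a rejected job. This is a sketch, not an HPCC tool: the function name check_arrow_directives is hypothetical, and it only tests that both directives are present, not that their values match your registration.

```shell
#!/bin/bash
# Sketch: verify that a job script has the --qos and --partition
# directives Arrow requires. Accepts "--opt=value", "--opt value"
# and the short forms -q / -p.
check_arrow_directives() {
    local script=$1
    local missing=0
    if ! grep -Eq '^#SBATCH[[:space:]]+(--qos(=|[[:space:]])|-q[[:space:]])' "$script"; then
        echo "missing: #SBATCH --qos" >&2
        missing=1
    fi
    if ! grep -Eq '^#SBATCH[[:space:]]+(--partition(=|[[:space:]])|-p[[:space:]])' "$script"; then
        echo "missing: #SBATCH --partition" >&2
        missing=1
    fi
    return "$missing"
}
```

For example, check_arrow_directives myjob.sh && sbatch myjob.sh submits the script only when both lines are found.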

Submitting serial (sequential) jobs

These jobs use only a single CPU-core. Below is a sample Slurm script for a serial job in the partition partchem; users registered to another partition must change the QOS and partition lines as explained above:

#!/bin/bash

#SBATCH --job-name=serial_job # short name for job

#SBATCH --nodes=1 # node count always 1

#SBATCH --ntasks=1 # total number of tasks always 1

#SBATCH --cpus-per-task=1 # cpu-cores per task (>1 if multi-threaded tasks)

#SBATCH --mem-per-cpu=8G # memory per cpu-core

#SBATCH --qos=qoschem

#SBATCH --partition partchem

cd $SLURM_SUBMIT_DIR

srun ./myjob

In the above script, the requested resources are:

- --nodes=1 - specify one node

- --ntasks=1 - claim one task (by default 1 per CPU-core)

The job can be submitted for execution with the command:

sbatch <name of the SLURM script>

For instance, if the above script is saved in a file named serial_j.sh, the command is:

sbatch serial_j.sh

Submitting multithreaded jobs

Some software, like MATLAB or GROMACS, can use multiple CPU-cores through shared-memory parallel programming models like OpenMP, pthreads or Intel Threading Building Blocks (TBB). OpenMP programs, for instance, run as multiple "threads" on a single node, with each thread using one CPU-core. The example below shows how to run a thread-parallel job on Arrow. Users must add lines for QOS and partition as explained above.

#!/bin/bash

#SBATCH --job-name=multithread # create a short name for your job

#SBATCH --nodes=1 # node count

#SBATCH --ntasks=1 # total number of tasks across all nodes

#SBATCH --cpus-per-task=4 # cpu-cores per task (>1 if multi-threaded tasks)

#SBATCH --mem-per-cpu=4G # memory per cpu-core (4G is default)

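export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK

export SRUN_CPUS_PER_TASK=$SLURM_CPUS_PER_TASK # let srun inherit --cpus-per-task

cd $SLURM_SUBMIT_DIR

srun ./myjob # myjob stands for your multithreaded program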

In this script the --cpus-per-task option is mandatory so that SLURM can run the multithreaded task on four CPU-cores. The correct choice of cpus-per-task is very important, because increasing this parameter typically decreases the execution time but increases the waiting time in the partition (queue). In addition, these types of jobs rarely scale well beyond 16 cores. The optimal value of cpus-per-task must therefore be determined empirically by conducting several test runs. It is important to remember that the code must 1. be multithreaded and 2. be compiled with the appropriate multithreading option, for instance the -fopenmp flag of the GNU compilers.
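The export line in the script copies --cpus-per-task into OMP_NUM_THREADS. A slightly more defensive variant, sketched below, falls back to a single thread when no -c value was requested, since SLURM sets SLURM_CPUS_PER_TASK only when that option is given. The helper name set_omp_threads and the source file myjob.c are hypothetical.

```shell
#!/bin/bash
# Sketch: set the OpenMP thread count from the SLURM allocation.
# SLURM_CPUS_PER_TASK exists only when --cpus-per-task (-c) was requested,
# so fall back to a single thread otherwise.
set_omp_threads() {
    OMP_NUM_THREADS=${SLURM_CPUS_PER_TASK:-1}
    export OMP_NUM_THREADS
}

set_omp_threads
echo "Running with $OMP_NUM_THREADS OpenMP thread(s)"

# The program itself must be built with OpenMP enabled, e.g. with GNU:
#   gcc -fopenmp -O2 myjob.c -o myjob
```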

Submitting distributed parallel jobs

These jobs use the Message Passing Interface (MPI) to realize distributed-memory parallelism across several nodes. The script below demonstrates how to run an MPI parallel job on Arrow. Users must add lines for QOS and partition as explained above.

#!/bin/bash

#SBATCH --job-name=MPI_job # short name for job

#SBATCH --nodes=2 # node count

#SBATCH --ntasks-per-node=32 # number of tasks per node

#SBATCH --cpus-per-task=1 # cpu-cores per task (>1 if multi-threaded tasks)

#SBATCH --mem-per-cpu=16G # memory per cpu-core

cd $SLURM_SUBMIT_DIR

srun ./mycode <args> # mycode is in the current directory; otherwise provide its full path

The above script can easily be modified for hybrid (OpenMP+MPI) jobs by changing the --cpus-per-task parameter. The optimal values of --nodes and --ntasks for a given code must be determined empirically with several test runs. To decrease communication overhead, users should try to run large jobs on a whole node rather than on chunks of 2 (or more) nodes. For instance, a large MPI job with 128 cores is better run on a single node than as two chunks of 64 cores on 2 nodes. To achieve that, users may use the following SLURM prototype script:

#!/bin/bash

#SBATCH --job-name MPI_J_2

#SBATCH --nodes 1

#SBATCH --ntasks 128 # total number of tasks

#SBATCH --mem 40G # memory per node (the total memory for this single-node job)

#SBATCH --qos=qoschem

#SBATCH --partition partchem

cd $SLURM_SUBMIT_DIR

srun ...

In the above script the requested resources are 128 cores on one node. Note that unused memory on this node will not be accessible to other jobs. Unlike the previous script, memory is requested here with --mem, which SLURM interprets as memory per node; since the job runs on a single node, this is the total memory of the job.
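When running the test jobs needed to find the best --nodes/--ntasks combination, it helps to record in each output file what SLURM actually allocated. A minimal sketch using standard SLURM output variables, which SLURM normally sets inside a job; the fallback value "unset" covers any that are missing. The function name log_allocation is hypothetical.

```shell
#!/bin/bash
# Sketch: print what SLURM allocated, so the output files of several
# test runs can be compared. Prints "unset" for any variable not set
# (for instance when run outside a job).
log_allocation() {
    echo "job ${SLURM_JOB_ID:-unset}:" \
         "${SLURM_NTASKS:-unset} task(s) on ${SLURM_JOB_NUM_NODES:-unset} node(s)" \
         "[${SLURM_JOB_NODELIST:-unset}]"
}

# In the job script, call it just before the srun line:
log_allocation
# srun ./mycode <args>
```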

Submitting Hybrid (OMP+MPI) job on Arrow

#!/bin/bash

#SBATCH --job-name=OMP_MPI # name of the job

#SBATCH --ntasks=24 # total number of tasks aka total # of MPI processes

#SBATCH --nodes=2 # total number of nodes

#SBATCH --tasks-per-node=12 # number of tasks per node

#SBATCH --cpus-per-task=2 # number of OMP threads per MPI process

#SBATCH --mem-per-cpu=16G # memory per cpu-core

#SBATCH --partition=partnsf

#SBATCH --qos=qosnsf

cd $SLURM_SUBMIT_DIR

export OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK

export SRUN_CPUS_PER_TASK=$SLURM_CPUS_PER_TASK

srun ...

The above script is a prototype and shows how to allocate 24 MPI tasks (processes) on 2 nodes, 12 tasks per node. Each MPI task starts 2 OpenMP threads, so the job occupies 24 CPU-cores per node. For an actual working script, users must adapt the QOS and partition lines to the partition they are registered to and adjust their memory requirements.
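The core count of a hybrid job is easy to get wrong, because each MPI task occupies --cpus-per-task cores. A small helper (the name hybrid_cores is hypothetical) can check the arithmetic of a request before choosing --mem-per-cpu or comparing it with the cores available on a node.

```shell
#!/bin/bash
# Sketch: CPU-core bookkeeping for a hybrid MPI+OpenMP request.
# Each MPI task occupies --cpus-per-task cores (one per OpenMP thread).
# hybrid_cores <ntasks> <ntasks-per-node> <cpus-per-task>
hybrid_cores() {
    local ntasks=$1 per_node=$2 cpt=$3
    echo "total_cores=$(( ntasks * cpt ))"
    echo "cores_per_node=$(( per_node * cpt ))"
    # Round up: a partly filled last node still counts as a node.
    echo "nodes=$(( (ntasks + per_node - 1) / per_node ))"
}

# Values of the prototype script: 24 tasks, 12 per node, 2 threads each.
hybrid_cores 24 12 2
```

For the prototype values this prints total_cores=48, cores_per_node=24 and nodes=2, so with --mem-per-cpu=16G the job asks for 384G of memory on each node.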

GPU jobs

On Arrow, each of the nodes has 8 A40 GPUs with 80GB on board. To use GPUs in a job, users must add the --gres option to the #SBATCH lines that request resources. The example below demonstrates a GPU-enabled SLURM script. Users must add lines for QOS and partition as explained above.

#!/bin/bash

#SBATCH --job-name=GPU_J # short name for job

#SBATCH --nodes=1 # number of nodes

#SBATCH --ntasks=1 # total number of tasks across all nodes

#SBATCH --cpus-per-task=1 # cpu-cores per task (>1 if multi-threaded tasks)

#SBATCH --mem-per-cpu=16G # memory per cpu-core

#SBATCH --gres=gpu:1 # number of gpus per node max 8 for Arrow

cd $SLURM_SUBMIT_DIR

srun ... <code> <args>
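To confirm inside a GPU job that the requested devices were actually assigned, the job can report them before starting the code. This sketch assumes the cluster's GRES setup exports CUDA_VISIBLE_DEVICES as a comma-separated list of device indices (such as "0,1"), as SLURM usually does for gpu GRES; the function name count_visible_gpus is hypothetical.

```shell
#!/bin/bash
# Sketch: count the GPUs assigned to this job.
# Assumption: CUDA_VISIBLE_DEVICES holds a comma-separated list such as "0,1".
count_visible_gpus() {
    local list=${CUDA_VISIBLE_DEVICES:-}
    if [ -z "$list" ]; then
        echo 0
        return
    fi
    # Split on commas and count the entries.
    local IFS=,
    set -- $list
    echo $#
}

echo "GPUs visible to this job: $(count_visible_gpus)"
# On a GPU node, nvidia-smi lists the same devices and their memory use:
#   nvidia-smi
```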

Parametric jobs via Job Array

Job arrays are used to run the same job many times with only slightly different parameters. The script below demonstrates how to run such a job on Arrow. Users must add lines for QOS and partition as explained above.

#!/bin/bash

#SBATCH --job-name=Array_J # short name for job

#SBATCH --nodes=1 # node count

#SBATCH --ntasks=1 # total number of tasks across all nodes

#SBATCH --cpus-per-task=1 # cpu-cores per task (>1 if multi-threaded tasks)

#SBATCH --mem-per-cpu=16G # memory per cpu-core

#SBATCH --output=slurm-%A.%a.out # stdout file (standard output)

#SBATCH --error=slurm-%A.%a.err # stderr file (standard error)

#SBATCH --array=0-3 # job array indexes 0, 1, 2, 3

cd $SLURM_SUBMIT_DIR

<executable>
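Each copy of an array job can tell which element it is through SLURM_ARRAY_TASK_ID (in the file names above, %A stands for the array's job ID and %a for the array index). A minimal sketch of turning that index into a parameter value; the values, the helper name param_for_task and the --alpha option of mycode are all hypothetical.

```shell
#!/bin/bash
# Sketch: map the array index of this task to one parameter value.
# With --array=0-3 SLURM starts four tasks and sets SLURM_ARRAY_TASK_ID
# to 0, 1, 2 or 3 in each of them.
# The values below are hypothetical; index 0 selects the first one.
PARAMS="0.1 0.2 0.5 1.0"

# param_for_task [index]  (defaults to SLURM_ARRAY_TASK_ID, then 0)
param_for_task() {
    local id=${1:-${SLURM_ARRAY_TASK_ID:-0}}
    echo "$PARAMS" | cut -d' ' -f"$(( id + 1 ))"
}

# In the job script (mycode and --alpha are hypothetical):
#   srun ./mycode --alpha "$(param_for_task)"
```

Keeping the values in one list next to the --array range makes it easy to check that every index has a parameter.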

To be able to schedule your job for execution and to actually run it on one or more compute nodes, SLURM needs to be instructed about your job’s parameters. These instructions are typically stored in a “job submit script”. In this section, we describe the information that needs to be included in a job submit script. The submit script typically includes:

- job name

- queue name

- the compute resources (number of nodes, number of cores, amount of memory, amount of local scratch disk storage (applies to Andy, Herbert, and Penzias), and number of GPUs) or other resources the job will need

- packing option

- the actual commands that need to be executed (the binary that needs to be run, input/output redirection, etc.).

A pro forma job submit script is provided below.

#!/bin/bash

#SBATCH --partition <queue_name>

#SBATCH -J <job_name>

#SBATCH --ntasks=<cpus>

#SBATCH --mem <????>

# change to the working directory

cd $SLURM_SUBMIT_DIR

echo ">>>> Begin <job_name>"

# actual binary (with IO redirections) and required input

# parameters are called in the next line

mpirun -np <cpus> <Program Name> <input_text_file> > <output_file_name> 2>&1

Note: The #SBATCH string must precede every SLURM parameter.

A # symbol at the beginning of any other line designates a comment line, which is ignored by SLURM.

Explanation of SLURM attributes and parameters:

- --partition <queue_name>: the main available queue is “production” unless otherwise instructed.

  - “production” is the normal queue for processing your work on Penzias.

  - “development” is used when you are testing an application. Jobs submitted to this queue cannot request more than 8 cores or use more than 1 hour of total CPU time. If the job exceeds these parameters, it will be automatically killed. The “development” queue has higher priority, and thus jobs in this queue have shorter wait times.

  - “interactive” is used for quick interactive tests. Jobs submitted to this queue run in an interactive terminal session on one of the compute nodes. They cannot use more than 4 cores or more than a total of 15 minutes of compute time.

- -J <job_name>: the user must assign a name to each job they run. Names can be up to 15 alphanumeric characters in length.

- --ntasks=<cpus>: the number of cpus (or cores) that the user wants to use.

  - Note: SLURM refers to “cores” as “cpus”; currently HPCC clusters map one thread to one core.

- --mem <mem>: this parameter is required. It specifies how much memory is needed per node.

- --gres <gpu:2>: the number of graphics processing units that the user wants to use on a node (this parameter is only available on Penzias). Here gpu:2 denotes a request for 2 GPUs.

Special note for MPI users

How these parameters are defined can significantly affect the run time of a job. For example, assume you need to run a job that requires 64 cores. This can be scheduled in a number of different ways. For example,

#SBATCH --nodes 8

#SBATCH --ntasks-per-node 8

will place 8 tasks on each of 8 nodes (64 tasks in total). While this may minimize communication overhead in your MPI job, SLURM will not schedule this job until 8 nodes, each with 8 free cpus, become available. Consequently, the job may wait longer in the input queue before going into execution.

#SBATCH --nodes 32

#SBATCH --ntasks-per-node 2

will place 2 tasks on each of 32 nodes (again 64 tasks in total). Such a job needs only 2 free cpus per node, but it is distributed more sparsely across the system, and hence the average communication latency may be larger than in the case with 8 nodes and 8 tasks per node.

mpirun -np <total tasks or total cpus>: this script line is only used for MPI jobs and defines the total number of cores required by the parallel MPI job.

Table 2 below shows the maximum values of the various SLURM parameters by system. Request only the resources you need, as requesting maximal resources will delay your job.

Serial Jobs

For serial jobs, --nodes 1 and --ntasks 1 should be used.

#!/bin/bash

#

# Typical job script to run a serial job in the production queue

#

#SBATCH --partition production

#SBATCH -J <job_name>

#SBATCH --nodes 1

#SBATCH --ntasks 1

# Change to working directory

cd $SLURM_SUBMIT_DIR

# Run my serial job

</path/to/your_binary> > <my_output> 2>&1

OpenMP and Threaded Parallel jobs

OpenMP jobs can only run on a single virtual node. Therefore, for OpenMP jobs, --nodes 1 and --ntasks 1 should be used; the number of cores per task (-c or --cpus-per-task) should be set to 2, 3, 4, … n, where n must be less than or equal to the number of cores on a virtual compute node.

Typically, OpenMP jobs will use the --mem parameter and may request up to all the available memory on a node.

#!/bin/bash

#SBATCH -J Job_name

#SBATCH --partition production

#SBATCH --ntasks 1

#SBATCH --nodes 1

#SBATCH --mem=<mem>

#SBATCH -c 4

# Set OMP_NUM_THREADS to the same value as -c

# with a fallback in case it isn't set.

# SLURM_CPUS_PER_TASK is set to the value of -c, but only if -c is explicitly set

if [ -n "$SLURM_CPUS_PER_TASK" ]; then

  omp_threads=$SLURM_CPUS_PER_TASK

else

  omp_threads=1

fi

export OMP_NUM_THREADS=$omp_threads

# Run the threaded binary (a single process, so mpirun is not needed)

</path/to/your_binary> > <my_output> 2>&1

MPI Distributed Memory Parallel Jobs

For an MPI job, --nodes and --ntasks can be one or more, and the mpirun -np value must be at least 1 (normally equal to --ntasks).

#!/bin/bash

#

# Typical job script to run a distributed memory MPI job in the production queue requesting 16 cores in 16 nodes.

#

#SBATCH --partition production

#SBATCH -J <job_name>

#SBATCH --ntasks 16

#SBATCH --nodes 16

#SBATCH --mem=<mem>

# Change to working directory

cd $SLURM_SUBMIT_DIR

# Run my 16-core MPI job

mpirun -np 16 </path/to/your_binary> > <my_output> 2>&1

GPU-Accelerated Data Parallel Jobs

#!/bin/bash

#

# Typical job script to run a 1 CPU, 1 GPU batch job in the production queue

#

#SBATCH --partition production

#SBATCH -J <job_name>

#SBATCH --ntasks 1

#SBATCH --gres gpu:1

#SBATCH --mem <mem>

# Find out which compute node the job is using

hostname

# Change to working directory

cd $SLURM_SUBMIT_DIR

# Run my GPU job on a single node using 1 CPU and 1 GPU.

</path/to/your_binary> > <my_output> 2>&1

Submitting jobs for execution

NOTE: We do not allow users to run any production job on the login-node. It is acceptable to do short compiles on the login node, but all other jobs must be run by handing off the “job submit script” to SLURM running on the head-node. SLURM will then allocate resources on the compute-nodes for execution of the job.

The command to submit your “job submit script” (<job.script>) is:

sbatch <job.script>

This section is in development.

Saving output files and clean-up

Normally you expect certain data in the output files as a result of a job. There are a number of things that you may want to do with these files:

- Check the content of these outputs and discard them. In this case you can simply delete all unwanted data with the rm command.

- Move the output files to your local workstation. You can use scp for small amounts of data and/or GlobusOnline for larger data transfers.

- Store the outputs on HPCC resources. In this case you can move your outputs either to /global/u or to the SR1 storage resource.

In all cases your /scratch/<userid> directory is expected to be empty afterwards. Output files stored inside /scratch/<userid> can be purged at any moment, except for files located under /scratch/<userid>/<job_name> that are currently being used by active jobs.
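A sketch of the third option, keeping outputs on HPCC storage: it copies a finished job's directory from scratch to the home directory and removes the scratch copy only if the copy succeeded. It assumes that <userid> is your login name ($USER), that the job ran in /scratch/<userid>/<job_name>, and that no active job still uses that directory; the results/ layout and the function name save_job_outputs are hypothetical. Run it on the login node, since on Penzias, Appel and Karle /global/u is mounted only there.

```shell
#!/bin/bash
# Sketch: move a finished job's outputs from scratch to the home directory.
# Assumptions: <userid> is $USER, the job ran in SCRATCH_BASE/<job_name>,
# and no active job still uses that directory.
HOME_BASE=${HOME_BASE:-/global/u/$USER}
SCRATCH_BASE=${SCRATCH_BASE:-/scratch/$USER}

save_job_outputs() {
    local job_name=$1
    local src="$SCRATCH_BASE/$job_name"
    local dest="$HOME_BASE/results/$job_name"   # hypothetical layout
    [ -d "$src" ] || { echo "no such directory: $src" >&2; return 1; }
    mkdir -p "$dest" || return 1
    # Copy everything (including hidden files); delete the scratch copy
    # only when the copy succeeded.
    cp -rp "$src/." "$dest/" && rm -rf "$src"
}

# Example:
#   save_job_outputs run01
```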