File Transfers

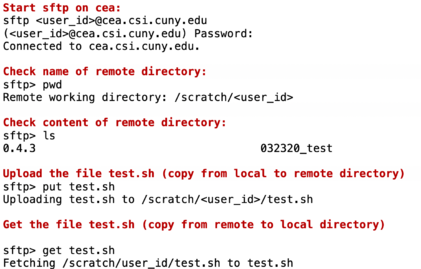

:• '''iRODS''': The data grid/data management tool provided by CUNY HPC Center for accessing the '''SR1''' resource. iRODS clients ('''<font face="courier">iput, iget, irsync</font>''') provide a data transfer mechanism featuring bulk upload and parallel streams. | :[[File:SFTP example.png|left|thumb|421x421px|Use sftp to transfer files to/from Penzias file systems]]• '''iRODS''': The data grid/data management tool provided by CUNY HPC Center for accessing the '''SR1''' resource. iRODS clients ('''<font face="courier">iput, iget, irsync</font>''') provide a data transfer mechanism featuring bulk upload and parallel streams. | ||


Revision as of 18:42, 18 July 2023

There are several methods for transferring files to HPCC, depending on the tier and, consequently, the file system used.

Basic and advanced tier file transfer

The basic and advanced tiers are attached to the DSMS file system, as described in Figure 1 above. Users of these resources may transfer files via Globus Online or via cea, the file transfer node. In addition, users of these tiers may use the iRODS data management system. Each method is described below.

- • Globus Online: The preferred method for large files, with extra features for parallel data streams, auto-tuning and automatic fault recovery. Globus Online is used to transfer files between systems: between the CUNY HPC Center resources and XSEDE facilities, or even users' desktops. A typical transfer rate ranges from 100 to 400 Mbps.

- Details on connecting via Globus can be found here: https://cunyhpc.csi.cuny.edu/zircon_d8/sites/default/files/Globus_Instructions.pdf

- • cea.csi.cuny.edu: Only Secure FTP (SFTP) can be used to transfer files to cea.csi.cuny.edu. Each of the servers is mounted on cea under its own name, so users can place their files on a particular server simply by prefixing /scratch with /<name of the server>, e.g. sftp> put TEST.txt /penzias/scratch/john.doe/TEST2.txt

- • iRODS: The data grid/data management tool provided by the CUNY HPC Center for accessing the SR1 resource. The iRODS clients (iput, iget, irsync) provide a data transfer mechanism featuring bulk upload and parallel streams.
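The cea path convention above can be sketched as a small shell helper. This is only an illustration, not an official HPCC script: the function name cea_scratch_path and the temporary batch file are hypothetical, and the server, user and file names are the ones from the example above. The sketch builds the destination path by prefixing /scratch with the server name, then writes a one-line batch file that sftp can read with its -b option.

```shell
# Build the destination path on cea for a given server, user and file name.
# cea_scratch_path is a hypothetical helper name used only for illustration.
cea_scratch_path() {
    server="$1"
    user="$2"
    name="$3"
    # Each server is mounted on cea under its own name, so the path is
    # /<name of the server>/scratch/<user>/<file>.
    printf '/%s/scratch/%s/%s\n' "$server" "$user" "$name"
}

# Destination for the example from the text above.
dest=$(cea_scratch_path penzias john.doe TEST2.txt)

# Write a one-line sftp batch file that uploads the local file TEST.txt.
batch=$(mktemp)
printf 'put %s %s\n' TEST.txt "$dest" > "$batch"
cat "$batch"

# To run the transfer (needs an HPCC account and network access), uncomment:
# sftp -b "$batch" john.doe@cea.csi.cuny.edu
```

The transfer line itself is left commented out so that the path logic can be checked locally before connecting; replace john.doe with your own user name.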

Arrow cluster and NSF storage

For the first project year, the storage and computational nodes purchased with the NSF grant will be accessible only to the core members of the NSF grant. During that time the acquired storage system will not serve as the main storage for HPCC, and consequently the mechanisms explained above cannot be used. Users of that resource should consult HPCC about possible methods of transferring files to this storage by tunneling via the HPCC gateway.