Main Page

Revision as of 18:20, 10 September 2023

The City University of New York (CUNY) High Performance Computing Center (HPCC) is located on the campus of the College of Staten Island, 2800 Victory Boulevard, Staten Island, New York 10314. The CUNY-HPCC supports computational research and computationally intensive courses offered at all CUNY colleges in fields such as Computer Science, Engineering, Bioinformatics, Chemistry, Physics, Materials Science, Genetics, Computational Biology, Finance and others. HPCC provides educational outreach to local schools and supports undergraduates who work in the research programs of the host institution (the NSF Research Experiences for Undergraduates (REU) program). The primary mission of HPCC is:

- To enable advanced research and scholarship at CUNY colleges by providing faculty, staff, and students with access to high-performance computing, adequate storage, and visualization resources;

- To provide CUNY faculty and their collaborators at other universities, CUNY research staff, and CUNY graduate and undergraduate students with expertise in scientific computing, parallel scientific computing (HPC), software development, advanced data analytics, data-driven science and simulation science, visualization, advanced database engineering, and related areas;

- To leverage the HPC Center's capabilities to acquire additional research resources for CUNY faculty, researchers, and students in existing and major new programs;

- To create opportunities for the CUNY research community to win grants from national funding institutions and to develop new partnerships with the government and private sectors.

Research Computing Infrastructure

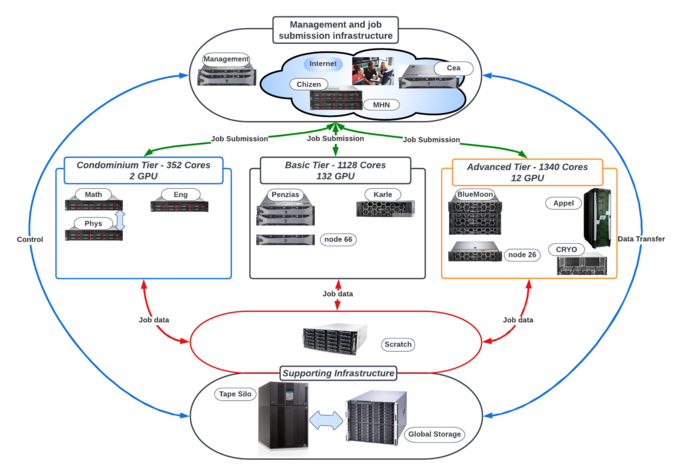

The research computing infrastructure is depicted in the figure on the right. To support various types of research projects, CUNY-HPCC supports a variety of computational architectures. All computational resources are organized in three tiers: the Condominium Tier (CT), the Free Tier (FT) and the Advanced Tier (AT). Servers in FT and AT are attached to two separate file systems, /scratch and Global Storage (GS, mounted as /global/u/), while Arrow and the servers in CT are attached to an independent hybrid parallel file system (HPFS, not shown in the figure). For Arrow and the CT servers, /scratch and /global/u are placed on different tiers of the same HPFS. Currently, only data on GS are protected by backup; due to lack of funding, the HPFS is not connected to the backup tape silo.

Run jobs on HPCC resources

Regardless of the tier and file system used, all jobs at HPCC must:

- Start from the user's directory on the scratch file system, /scratch/<userid>. Jobs cannot be started from users' home directories, /global/u/<userid>.

- Use the SLURM job submission system (job scheduler). All job submission scripts written for other job schedulers (e.g., PBS Pro) must be converted to SLURM syntax.
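Both rules can be enforced by the job script itself. The sketch below is a SLURM batch script written in Python: Slurm reads the `#SBATCH` lines at the top of a script regardless of its interpreter, and `sbatch` exports the submission directory as `SLURM_SUBMIT_DIR`. The partition `production` comes from the partition table on this page; the job name, time limit, file name and the check itself are hypothetical illustrations, not an HPCC-provided template.

```python
#!/usr/bin/env python3
#SBATCH --job-name=scratch_check
#SBATCH --partition=production
#SBATCH --ntasks=1
#SBATCH --time=01:00:00
"""Hypothetical job that refuses to run unless submitted from /scratch/<userid>."""
import getpass
import os
import sys
from pathlib import PurePosixPath


def submitted_from_scratch(submit_dir: str, userid: str) -> bool:
    """True if submit_dir is /scratch/<userid> or lies beneath it."""
    path = PurePosixPath(os.path.normpath(submit_dir))
    scratch_home = PurePosixPath("/scratch") / userid
    return path == scratch_home or scratch_home in path.parents


def main() -> int:
    userid = getpass.getuser()
    # sbatch exports the directory it was invoked from as SLURM_SUBMIT_DIR.
    submit_dir = os.environ.get("SLURM_SUBMIT_DIR", os.getcwd())
    if not submitted_from_scratch(submit_dir, userid):
        print(f"error: submit this job from /scratch/{userid}, not {submit_dir}",
              file=sys.stderr)
        return 1
    print(f"job started from {submit_dir}")
    # ... the actual computation would go here ...
    return 0


# SLURM_JOB_ID is set only inside a Slurm job, so importing this file elsewhere
# (for example, to test submitted_from_scratch) does not trigger the check.
if __name__ == "__main__" and "SLURM_JOB_ID" in os.environ:
    sys.exit(main())
```

Assuming the file is saved as `job.py`, it would be submitted with `sbatch job.py` from inside /scratch/<userid>.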

All important user data must be kept in the user's home directory, /global/u/<userid>. For CT servers and for Arrow, users' home directories reside on the HPFS, while for servers in AT and FT the data are stored on the older GS. Note that the GS and HPFS file systems are not connected. Data on any /scratch file system can be purged at any time and are not protected by tape backup. The /scratch file system is mounted on login nodes and on all computational nodes; consequently, jobs can be started only from a /scratch directory and never from /global/u/<userid>. It is important to remember that /scratch is not the main storage for users' accounts (home directories), but temporary storage used for job submission only. Thus:

- Data in /scratch are not protected, preserved or backed up and can be lost at any time. CUNY-HPCC has no obligation to preserve user data in /scratch.

- /scratch undergoes regular, automatic file purging when either or both of the following conditions are met:

  - the load of the /scratch file system reaches 71%;

  - there are inactive files older than 60 days.

- Only data in /global/u/<userid> on GS are protected and recoverable.

- Data on the HPFS are not recoverable, in either /scratch or /global/u/<userid>.
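To see which of your /scratch files are at risk under the two purge conditions, a short script can compare file timestamps against the 60-day threshold and report how full the file system is. This is a minimal sketch with hypothetical function names; it assumes that "inactive" means neither read nor modified within 60 days, which may not match the timestamp the actual purge tool uses, and access times may be stale on file systems mounted with `noatime`.

```python
import os
import shutil
import stat
import time
from pathlib import Path
from typing import List, Optional

SECONDS_PER_DAY = 86_400
PURGE_AGE_DAYS = 60       # inactivity threshold stated on this page
PURGE_USAGE_PERCENT = 71  # file-system load threshold stated on this page


def usage_percent(mount_point: str) -> float:
    """Approximate share (in %) of the file system holding mount_point in use."""
    usage = shutil.disk_usage(mount_point)
    return 100.0 * usage.used / usage.total


def inactive_files(root: str, max_age_days: int = PURGE_AGE_DAYS,
                   now: Optional[float] = None) -> List[Path]:
    """Regular files under root not accessed or modified within max_age_days."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * SECONDS_PER_DAY
    stale = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = Path(dirpath) / name
            try:
                st = os.lstat(path)  # do not follow symlinks
            except FileNotFoundError:
                continue  # file vanished while scanning
            # Assumption: "inactive" = newest of atime/mtime is older than cutoff.
            if stat.S_ISREG(st.st_mode) and max(st.st_atime, st.st_mtime) < cutoff:
                stale.append(path)
    return sorted(stale)
```

For example, `inactive_files("/scratch/<userid>")` (with your own user ID substituted) lists files that may be purged, and `usage_percent("/scratch") >= PURGE_USAGE_PERCENT` indicates the load condition; anything worth keeping should be copied to your home directory.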

Upon registering with HPCC, every user gets two directories:

- /scratch/<userid>: temporary workspace on the HPC systems;

- /global/u/<userid>: space for the “home directory”, i.e., storage space on the DSMS for programs, scripts, and data.

- In some instances, a user will also have use of disk space on the DSMS in /cunyZone/home/<projectid> (iRODS).

The /global/u/<userid> directory has a quota (see below for details), while /scratch/<userid> does not. However, /scratch space is cleaned up following the rules described above. There is no guarantee of any kind that files in /scratch will be preserved through hardware crashes or cleanup. Access to all HPCC resources is provided through a bastion host called Chizen. The Data Transfer Node, called Cea, allows file transfers from/to remote sites directly to/from /global/u/<userid> or /scratch/<userid>.

| Server | Tier | Scratch | Data storage | Number of nodes | Cores per node | Memory per node | GPUs per node | Total cores per cluster | Total GPUs per cluster | Interconnect |
|---|---|---|---|---|---|---|---|---|---|---|
| Penzias | FT | Lustre FS | GPFS FS (GS) | 60 | 16 | 64 GB | 2 x K20m/5 GB PCIe 2 | 960 | 120 | IB - 40 Gbps |
| Blue Moon | AT | Lustre FS | GPFS FS (GS) | 24 | 32 | 192 GB | 2 x V100/16 GB PCIe 3 | 832 | 4 | IB - 100 Gbps |
| Karle | AT | Lustre FS | GPFS FS (GS) | 1 | 36 | 768 GB | none | 36 (72 with hyperthreading) | none | IB - 40 Gbps |
| Appel | AT | Lustre FS | GPFS FS (GS) | 1 | 384 | 11 TB | none | 384 | none | IB - 40 Gbps |
| Cryo | AT | Lustre FS | GPFS FS (GS) | 1 | 40 | 1.5 TB | 8 x V100/32 GB SXM | 40 | 8 | IB - 40 Gbps |
| Arrow | AT | HPFS NVMe | HPFS spin | 2 | 128 | 512 GB | 8 x A100/80 GB | 128 | 16 | IB - 200 Gbps |
| Math | CT | HPFS NVMe | HPFS spin | 1 | 128 | 512 GB | 2 x A40/48 GB | 128 | 2 | IB - 200 Gbps |
| Phys | CT | HPFS NVMe | HPFS spin | 1 | 64 | 512 GB | none | 64 | none | IB - 200 Gbps |
| Chem | CT | HPFS NVMe | HPFS spin | 2 | 64 | 512 GB | 2 x A30/24 GB PCIe 3 + 8 x A100/40 GB SXM | 192 | 10 | IB - 200 Gbps |
| ASRC | CT | HPFS NVMe | HPFS spin | 1 | 64 | 512 GB | 2 x A30/24 GB | 64 | 2 | IB - 200 Gbps |
| Eng | CT | HPFS NVMe | HPFS spin | 1 | 128 | 512 GB | 1 x A40/48 GB | 128 | 1 | IB - 200 Gbps |

The computational architectures participating in each tier are discussed below. Note that GS is mounted only on the login nodes, whereas /scratch is mounted on the login nodes and on all computational nodes in all tiers. GS therefore holds long-lived user data (executables, scripts and data) in home directories and project data in project directories, while /scratch holds the provisional data required by particular simulations. This is why jobs can be started only from /scratch and never from GS.

Condominium Tier

The Condominium Tier (called condo) organizes resources purchased and owned by faculty but maintained by HPCC. Participation in this tier is strictly voluntary. Several faculty members or research groups can combine funds to purchase, and consequently share, the hardware (one node or several nodes). To be accepted, all nodes in this tier must meet certain hardware specifications, including being fully warranted for the lifetime of the node(s). If you want to participate in the condo, please send a request to hpchelp@csi.cuny.edu and consult HPCC before making a purchase. The Condominium Tier:

- Promotes vertical and horizontal collaboration between research groups;

- Makes it possible to use small amounts of research money, or "left-over" money, wisely to obtain advanced resources;

- Helps researchers conduct large-scope, high-quality research, including collaborative projects leading to successful, high-impact grants.

Access to condo resources

The resources are available only to condo owners and their groups. Users registered with the condo must use the main login node of the Arrow server. To access their own node, condo users must specify their own private partition. In addition, there are partitions that operate over two or more nodes owned by condo members. Condo tier members benefit from professional security and maintenance support from HPCC. Upon approval from the node owner, any idle node(s) can be used by any other member(s); for instance, a member can borrow, for an agreed time, a node with more advanced GPUs than those installed on his/her own node(s). The owners of the equipment are responsible for any repair costs for their node(s). Other users may rent any of the condo resources described below if agreed with the owners. The unused cycles can be shared with other members of the community.

In sum, the benefits of the condo are:

- 5-year lifecycle: condo resources will be available for a duration of 5 years.

- Access to more CPU cores than purchased, and access to resources that were not purchased.

- Support: HPCC staff will install, upgrade, secure and maintain condo hardware throughout its lifecycle.

- Access to the main application server.

- Access to HPC analytics.

Responsibilities of condo members

- To share their resources (when idle or partially available) with other members of the condo;

- To include in their research and instrumentation grants funds for computing that cover the operational (non-tax-levy) expenses of HPCC.

The table below summarizes the available resources.

| Number of nodes | Cores/node | Chip | Memory/node | GPU/node | Interconnect | Use | Private partition |
|---|---|---|---|---|---|---|---|
| 2 | 64 | 2 x AMD EPYC | 256 GB | 2 x A30 24 GB, PCIe gen 3 | 100 Gbps InfiniBand EDR | Number crunching | parchem, partasrc |
| 1 | 64 | 2 x AMD EPYC | 512 GB | -- | 100 Gbps InfiniBand EDR | Number crunching | parthphys |
| 2 | 128 | 2 x AMD EPYC | 512 GB | 2 x A40 48 GB, PCIe gen 3 | 100 Gbps InfiniBand EDR | Number crunching | parting, partmath |
| 1 | 128 | 2 x AMD EPYC | 512 GB | 8 x A100 40 GB, SXM | 200 Gbps InfiniBand HDR | Number crunching | parched |

Advanced Tier

The Advanced Tier holds the resources used for more advanced or large-scale research. This tier provides nodes with Volta-class GPUs with 16 GB and 32 GB of on-board memory. The table below summarizes the resources.

| Number of nodes | Cores/node | Chip | Memory/node | GPU/node | Interconnect | Use | Association |
|---|---|---|---|---|---|---|---|
| 2 | 32 | 2 x Intel x86_64 | 192 GB | 2 x V100 (16 GB) PCIe gen 3 | 100 Gbps InfiniBand EDR | Number crunching | Blue Moon cluster |
| 24 | 32 | 2 x Intel x86_64 | 192 GB | -- | 100 Gbps InfiniBand EDR | Number crunching | Blue Moon cluster |
| 1 | 40 | 2 x Intel x86_64 | 1,500 GB | 8 x V100 (32 GB) SXM | 100 Gbps InfiniBand EDR | Number crunching | Cryo |
| 1 | 384 | 2 x Intel x86_64 | 11,000 GB | -- | 56 Gbps InfiniBand QDR | Number crunching | Appel |

Basic tier

The basic tier provides resources for sequential and moderate-size parallel jobs. OpenMP jobs can run only within a single node, while distributed parallel (MPI) jobs can run across the cluster. This tier also supports MATLAB Parallel Server, which can run across nodes. Users can also run GPU-enabled jobs, since this tier has 132 Tesla K20m GPUs. Please note that these GPUs are no longer supported by NVIDIA, and many applications may not support them either. The table below summarizes the resources for this tier.

| Number of nodes | Cores/node | Chip | Memory/node | GPU/node | Interconnect | Use | Association |
|---|---|---|---|---|---|---|---|
| 66 | 16 | 2 x Intel x86_64 | 64 GB | 2 x K20m, PCIe 2.0 | 56 Gbps InfiniBand | Number crunching, sequential jobs, general computing, distributed parallel MATLAB | Penzias |
| 1 | 36/72* | 2 x Intel x86_64 | 768 GB | -- | 56 Gbps InfiniBand | Visualization, MATLAB, parallel MATLAB (toolbox) | Karle |

* With hyperthreading
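The difference between OpenMP jobs (one node) and MPI jobs (across the cluster) shows up directly in the SLURM resource request. The sketch below is a hypothetical helper that writes the corresponding `#SBATCH` lines using standard `sbatch` options (`--nodes`, `--ntasks`, `--cpus-per-task`, `--time`, `--gres`). Requesting GPUs as `--gres=gpu:N` is an assumption about how HPCC names its GPU resource; check the partition documentation before relying on it.

```python
from typing import List


def slurm_time(hours: int) -> str:
    """Format a whole-hour wall-time limit as Slurm's days-hours:minutes:seconds."""
    days, rem = divmod(hours, 24)
    return f"{days}-{rem:02d}:00:00"


def _common_lines(job_name: str, partition: str, hours: int, gpus: int) -> List[str]:
    lines = [
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --partition={partition}",
        f"#SBATCH --time={slurm_time(hours)}",
    ]
    if gpus > 0:
        # Assumption: GPUs are a generic resource named "gpu" (count is per node).
        lines.append(f"#SBATCH --gres=gpu:{gpus}")
    return lines


def openmp_header(job_name: str, partition: str, threads: int,
                  hours: int, gpus: int = 0) -> List[str]:
    """Thread-parallel (OpenMP) job: one task on one node with `threads` cores."""
    return _common_lines(job_name, partition, hours, gpus) + [
        "#SBATCH --nodes=1",
        "#SBATCH --ntasks=1",
        f"#SBATCH --cpus-per-task={threads}",
    ]


def mpi_header(job_name: str, partition: str, tasks: int,
               hours: int, gpus: int = 0) -> List[str]:
    """Distributed (MPI) job: `tasks` single-core ranks that may span nodes."""
    return _common_lines(job_name, partition, hours, gpus) + [
        f"#SBATCH --ntasks={tasks}",
        "#SBATCH --cpus-per-task=1",
    ]


if __name__ == "__main__":
    print("\n".join(openmp_header("omp_test", "production", threads=16, hours=24)))
```

Here the OpenMP request asks for all 16 cores of one Penzias-class node for one day; the OpenMP program started from such a script would normally also set `OMP_NUM_THREADS` to the same value. The MPI variant deliberately omits `--nodes`, leaving SLURM free to spread the ranks over several nodes.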

Arrow cluster and hybrid storage (NSF grant 2023 equipment)

This equipment consists of a large hybrid parallel file system and two computational nodes integrated into a cluster named Arrow. The file system has a capacity of 2 PB (petabytes) and a bandwidth of 35 GB/s for writes and 50 GB/s for reads. The computational nodes are summarized in the table below.

| Number of nodes | Cores/node | GPU/node | Memory/node | Chip | Interconnect | Use | Association |
|---|---|---|---|---|---|---|---|
| 2 | 128 | 8 x A100/80 GB | 1024 GB | 2 x AMD EPYC | HDR 100 Gbps | Molecular modeling, data science, number crunching, materials science, AI, ML | Arrow |

HPC systems and their architectures

The HPC Center operates a variety of architectures to support complex and demanding workflows. The deployed systems include distributed memory computers (also referred to as “clusters”), symmetric multiprocessors (SMP), and distributed shared memory machines (also referred to as NUMA machines).

Computational Systems:

SMP servers have several processors (working under a single operating system) that "share everything": all CPU cores access a common memory block via a shared bus or data path. SMP servers support any combination of memory and CPU cores (up to the limits of the particular computer). SMP servers are commonly used to run sequential or thread-parallel (e.g., OpenMP) jobs, and they may or may not have GPUs. Currently, HPCC operates several standalone SMP servers named Math, Cryo and Karle. Karle is a server without GPUs that is used for visualization, visual analytics and interactive MATLAB/Mathematica jobs. Math is a condominium server, also without a GPU. Cryo (a CPU+GPU server) is a specialized server with eight (8) NVIDIA V100 (32 GB) GPUs designed to support large-scale multi-core, multi-GPU jobs.
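On a shared SMP server, a thread-parallel job should use only the cores SLURM allocated to it, not every core on the machine (Python's `os.cpu_count()` reports all logical CPUs of the server). A minimal sketch, assuming the job requests its cores with `--cpus-per-task`, in which case SLURM sets `SLURM_CPUS_PER_TASK`. It uses Python threads rather than OpenMP, and the checksum workload and function names are hypothetical; for compiled OpenMP codes the usual equivalent is exporting `OMP_NUM_THREADS=$SLURM_CPUS_PER_TASK` in the job script.

```python
import hashlib
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def allocated_cpus(default: int = 1) -> int:
    """Cores granted via --cpus-per-task (SLURM_CPUS_PER_TASK), else `default`."""
    value = os.environ.get("SLURM_CPUS_PER_TASK", "")
    if value.isdigit() and int(value) > 0:
        return int(value)
    return default


def sha256_of(path: str) -> str:
    """SHA-256 of a file, read in 1 MiB chunks to keep memory use flat."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def checksum_all(paths: List[str]) -> Dict[str, str]:
    """Checksum many files with one worker thread per allocated core."""
    # hashlib releases the GIL while hashing large buffers, so these threads can
    # run at the same time on the cores of a shared-memory (SMP) node.
    with ThreadPoolExecutor(max_workers=allocated_cpus()) as pool:
        return dict(zip(paths, pool.map(sha256_of, paths)))
```

Because all threads live in one process and share one memory space, this pattern works only within a single node, which is exactly the constraint on thread-parallel jobs described above.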

A cluster is defined as a single system comprising a set of SMP servers interconnected with a high-performance network. Specific software coordinates programs on and/or across those servers in order to perform computationally intensive tasks. Each SMP member of the cluster is called a node. All nodes run independent copies of the same operating system (OS). Some or all of the nodes may incorporate GPUs. The main cluster at HPCC is a hybrid (CPU+GPU) cluster called Penzias. Sixty-six (66) of the Penzias nodes have 2 x K20m GPUs, and the 3 fat nodes of the cluster (nodes with a large number of CPU cores and large memory) do not have GPUs. In addition, HPCC operates the cluster Herbert, which is dedicated only to education.
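Because each cluster node runs its own OS with its own memory, a distributed program must divide the work explicitly among its tasks. The sketch below shows a simple block decomposition, assuming the tasks are launched with `srun`, which sets `SLURM_PROCID` (this task's rank) and `SLURM_NTASKS` in each task's environment; a real MPI program would normally take its rank and size from the MPI library instead. The function names are hypothetical.

```python
import os
from typing import Tuple


def task_rank_and_count() -> Tuple[int, int]:
    """This task's rank and the total number of tasks, as exported by srun."""
    rank = int(os.environ.get("SLURM_PROCID", "0"))
    ntasks = int(os.environ.get("SLURM_NTASKS", "1"))
    return rank, ntasks


def block_range(n_items: int, rank: int, ntasks: int) -> range:
    """Contiguous block of item indices owned by `rank` out of `ntasks`.

    The first n_items % ntasks ranks receive one extra item, so block sizes
    differ by at most one and every item is assigned exactly once.
    """
    if ntasks <= 0 or not 0 <= rank < ntasks:
        raise ValueError("rank must satisfy 0 <= rank < ntasks")
    base, extra = divmod(n_items, ntasks)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return range(start, stop)
```

For example, with 10 items and 3 tasks the ranks own items 0 to 3, 4 to 6 and 7 to 9; each task then works only on its own block in its own node's memory.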

A distributed shared memory computer is a tightly coupled server in which the memory is physically distributed but logically unified as a single block. The system resembles an SMP, but the number of CPU cores and the amount of memory can far exceed the limits of an SMP. Because the memory is distributed, access times across the address space are non-uniform; hence this architecture is called Non-Uniform Memory Access (NUMA). Like SMP systems, NUMA systems are typically used for applications such as data mining and decision support systems, in which processing can be parceled out to a number of processors that collectively work on common data. HPCC operates a NUMA server called Appel. This server does not have a GPU.

Infrastructure systems:

o Master Head Node (MHN) is a redundant login node from which all jobs on all servers start. This server is not directly accessible from outside the CSI campus.

o Chizen is a redundant gateway server that provides access to the protected HPCC domain.

o Cea is a file transfer node that allows transfer of files between users' computers and /scratch or /global/u/<userid>. Cea is accessible directly (not only via Chizen), but it allows only a limited set of shell commands.

Table 1 below provides a quick summary of the attributes of each of the systems available at the HPC Center.

| System | Type | Type of jobs | Number of nodes | Cores/node | Chip type | GPU/node | Memory/node |
|---|---|---|---|---|---|---|---|
| Penzias | Hybrid cluster | Sequential and parallel jobs with/without GPU | 66 | 16 | 2 x Intel Sandy Bridge EP 2.20 GHz | 2 x K20m (5 GB on board), PCIe 2.0 | 64 GB |
| Blue Moon | Hybrid cluster | Sequential and parallel jobs with/without GPU | 24 (CPU) & 2 (CPU + GPU) | 32 | 2 x Intel Skylake 2.10 GHz | 2 x V100 (16 GB on board), PCIe 3.0 | 192 GB |
| Cryo | SMP | Sequential and parallel jobs with/without GPU | 1 | 40 | 2 x Intel Skylake 2.10 GHz | 8 x V100 (32 GB on board), SXM | 1,500 GB |
| Appel | NUMA | Massively parallel and/or big data jobs without GPU | 1 | 384 | 2 x Intel Ivy Bridge 3.0 GHz | -- | 11,000 GB |
| Fat node 1 | Part of Penzias | Big data jobs without GPU | 1 | 24 | 2 x Intel Sandy Bridge 2.30 GHz | -- | 768 GB |
| Fat node 2 | Part of Penzias | Big data jobs without GPU | 1 | 24 | 2 x Intel Sandy Bridge 2.30 GHz | -- | 1,500 GB |
| Fat node 3 | Part of Penzias | Big data jobs without GPU | 1 | 32 | 2 x Intel Haswell 2.30 GHz | -- | 768 GB |
| Condo 1 | Part of Condo | Sequential and parallel jobs with/without GPU | 1 | 64 | 2 x AMD EPYC Rome, 2.10 GHz | 2 x A30 | 256 GB |
| Condo 2 | Part of Condo | Sequential and parallel jobs with/without GPU | 1 | 64 | 2 x AMD EPYC Rome, 2.10 GHz | 2 x A30 | 256 GB |
| Condo 3 | Part of Condo | Sequential and parallel jobs with/without GPU | 1 | 128 | 2 x AMD EPYC Rome, 2.10 GHz | 1 x A40 | 512 GB |
| Condo 4 | Part of Condo | Sequential and parallel jobs with/without GPU | 1 | 128 | 2 x AMD EPYC Rome, 2.10 GHz | 2 x A40 | 512 GB |
| Condo 5 | Part of Condo | Sequential and parallel jobs with/without GPU | 1 | 64 | 2 x AMD EPYC Rome, 2.10 GHz | -- | 512 GB |
| Karle | SMP | Visualization, MATLAB, Mathematica | 1 | 72* | 2 x Intel Sandy Bridge 2.30 GHz | -- | 768 GB |
| Chizen | SMP | Gateway only | 1 (redundant) | -- | -- | -- | 64 GB |
| MHN | SMP | Master Head Node | 2 (redundant) | -- | -- | -- | -- |
| Cea | SMP | File transfer node | 1 | -- | -- | -- | -- |

* With hyperthreading

Partitions and jobs

The only way to submit jobs to HPCC servers is through the SLURM batch system. Any job, regardless of its type (interactive, batch, serial, parallel, etc.), must be submitted via SLURM, which allocates the requested resources on the proper server and starts the job(s) according to a predefined, strict fair-share policy. Computational resources (CPU cores, memory, GPUs) are organized in partitions. The main partition is called production. This is a routing partition that distributes jobs to several sub-partitions depending on each job's requirements; for example, a serial job submitted to production will land in the partsequential partition. PBS Pro scripts should never be used, and all existing PBS Pro scripts must be converted to SLURM before use. The table below shows the limits of the partitions.

| Partition | Max cores/job | Max jobs/user | Total cores/group | Time limit |
|---|---|---|---|---|
| production | 128 | 50 | 256 | 240 hours |
| partedu | 16 | 2 | 216 | 72 hours |
| partmath | 128 | 128 | 128 | 240 hours |
| partmatlab | 1972 | 50 | 1972 | 240 hours |
| partdev | 16 | 16 | 16 | 4 hours |

o production is the main partition, with assigned resources across all servers (except Math and Cryo). It is a routing partition, so jobs are placed in the proper sub-partition automatically. Users may submit sequential, thread-parallel or distributed parallel jobs, with or without GPUs.

o partedu is only for education. Its assigned resources are on the educational server Herbert. partedu is accessible only to students (graduate and/or undergraduate) and their professors who are registered for a class supported by HPCC. Access to this partition is limited to the duration of the class.

o partmatlab allows users to run MATLAB Parallel Server across the main cluster. Note, however, that Parallel Computing Toolbox programs can be submitted via the production partition, but only as thread-parallel jobs.

o partdev is dedicated to development. All HPCC users have access to this partition, whose assigned resources are one computational node with 16 cores, 64 GB of memory and 2 GPUs (K20m). This partition has a time limit of 4 hours.
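A request that exceeds a partition's per-job limits will not be accepted as submitted, so it can help to check it beforehand. The sketch below encodes only the maximum cores per job and the time limit of each partition, with values copied from the partition table; the per-user job counts and per-group core totals are not modelled, the names are hypothetical, and SLURM itself remains the authority on what it accepts.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PartitionLimits:
    max_cores_per_job: int
    max_hours: int


# Per-job limits copied from the partition table on this page.
LIMITS = {
    "production": PartitionLimits(128, 240),
    "partedu": PartitionLimits(16, 72),
    "partmath": PartitionLimits(128, 240),
    "partmatlab": PartitionLimits(1972, 240),
    "partdev": PartitionLimits(16, 4),
}


def check_request(partition: str, cores: int, hours: int) -> List[str]:
    """Reasons a request exceeds the published per-job limits (empty if none)."""
    limits = LIMITS.get(partition)
    if limits is None:
        return [f"unknown partition {partition!r}"]
    problems = []
    if cores > limits.max_cores_per_job:
        problems.append(f"{cores} cores > {limits.max_cores_per_job} allowed per job")
    if hours > limits.max_hours:
        problems.append(f"{hours} h > {limits.max_hours} h time limit")
    return problems
```

For example, `check_request("partdev", 32, 8)` reports both problems, a reminder that partdev is limited to one 16-core node for 4 hours.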

Hours of Operation

The second and fourth Tuesday mornings of each month, from 8:00 AM to 12:00 PM, are normally reserved (but not always used) for scheduled maintenance. Please plan accordingly.

Unplanned maintenance to remedy system related problems may be scheduled as needed. Reasonable attempts will be made to inform users running on those systems when these needs arise.

User Support

Users are encouraged to read this Wiki carefully. In particular, the sections on compiling and running parallel programs, and the section on the SLURM batch queueing system will give you the essential knowledge needed to use the CUNY HPC Center systems. We have strived to maintain the most uniform user applications environment possible across the Center's systems to ease the transfer of applications and run scripts among them.

The CUNY HPC Center staff, along with outside vendors, offer regular courses and workshops to the CUNY community in parallel programming techniques, HPC computing architecture, and the essentials of using our systems. Please follow our mailings on the subject and feel free to inquire about such courses. We regularly schedule training visits and classes at the various CUNY campuses. Please let us know if such a training visit is of interest. In the past, topics have included an overview of parallel programming, GPU programming and architecture, using the evolutionary biology software at the HPC Center, the SLURM queueing system at the CUNY HPC Center, mixed GPU-MPI and OpenMP programming, etc. Staff have also presented guest lectures at formal classes throughout the CUNY campuses.

If you have problems accessing your account and cannot log in to the ticketing service, please send an email to:

hpchelp@csi.cuny.edu

Warnings and modes of operation

1. hpchelp@csi.cuny.edu is for questions and account-help communication only and does not accept tickets. For tickets, please use the ticketing system mentioned above. This ensures that the staff member with the most appropriate skill set and job-related responsibility will respond to your questions. During the business week you should expect a response within 48 hours, and quite often the same day. During the weekend you may not get any response.

2. E-mails to hpchelp@csi.cuny.edu must have a valid CUNY e-mail as the reply address. Messages originating from public mail services (Google, Hotmail, etc.) are filtered out.

3. Do not send questions to individual CUNY HPC Center staff members directly. These will be returned to the sender with a polite request to submit a ticket or email the Helpline. This applies to replies to initial questions as well.

The CUNY HPC Center staff members are focused on providing high quality support to its user community, but compared to other HPC Centers of similar size our staff is extremely lean. Please make full use of the tools that we have provided (especially the Wiki), and feel free to offer suggestions for improved service. We hope and expect your experience in using our systems will be predictably good and productive.